Web Scraper

Content

Making Web Data Extraction

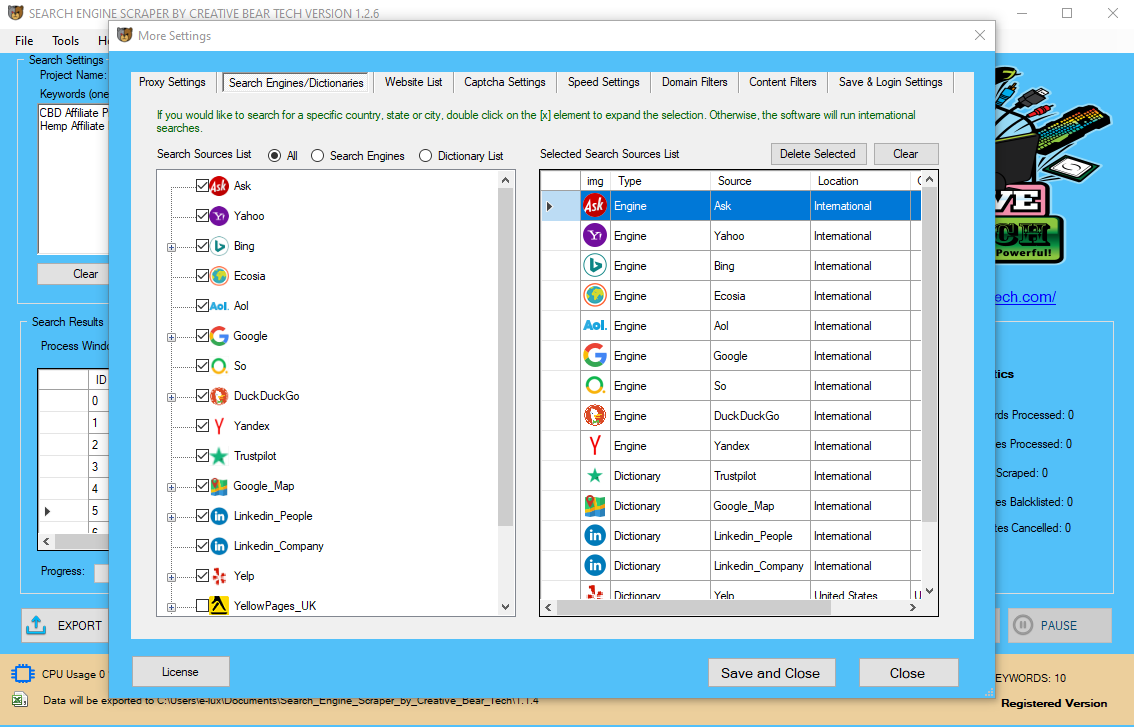

Some web scraping software can also extract data directly from an API. Web scraping is an automated technique for extracting large amounts of data from websites. It collects this unstructured data and stores it in a structured form.

Easy And Accessible For Everyone

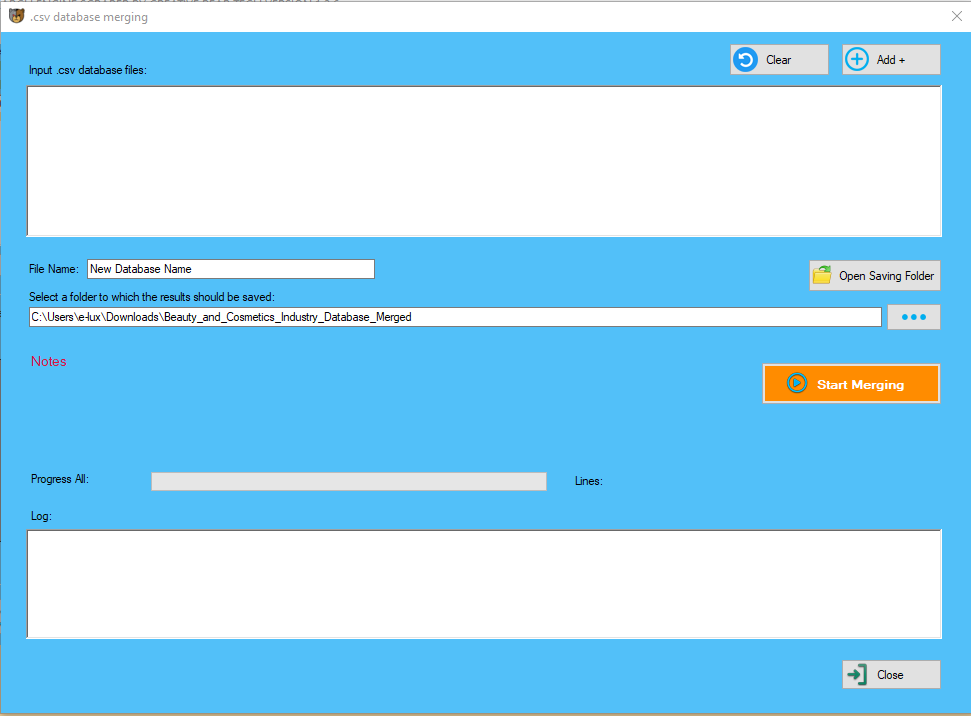

You can export the data as CSV or store it in CouchDB. Data Scraper can scrape tables and list-type data from a single web page. Its free plan should satisfy the simplest scraping needs with a light amount of data. The paid plan has more features, such as an API and many anonymous IP proxies.

Extract Data From Dynamic

This web scraper lets you scrape data in many different languages using multiple filters and export the scraped data in XML, JSON, and RSS formats. Just select some text in a table or a list, right-click the selected text, and choose "Scrape Similar" from the browser menu.
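To make the idea of scraping table-type data concrete, here is a minimal sketch using only Python's standard library. It is not how any of the tools named above work internally; the class name `TableRows`, the function `scrape_table`, and the sample HTML are all assumptions made for illustration.

```python
from html.parser import HTMLParser


class TableRows(HTMLParser):
    """Collect the text of every table cell, grouped by row."""

    def __init__(self):
        super().__init__()
        self.rows = []     # finished rows, each a list of cell strings
        self._row = None   # cells of the row being read, or None
        self._cell = None  # text fragments of the cell being read, or None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        # Only keep text that appears inside an open cell.
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None
            self._cell = None


def scrape_table(html):
    """Return the table rows found in an HTML string."""
    parser = TableRows()
    parser.feed(html)
    parser.close()
    return parser.rows


# Hypothetical sample page fragment.
SAMPLE_HTML = """
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Leash</td><td>9.50</td></tr>
  <tr><td>Collar</td><td>4.25</td></tr>
</table>
"""

if __name__ == "__main__":
    for row in scrape_table(SAMPLE_HTML):
        print(row)
```

A point-and-click tool hides this step behind the browser menu, but the result is the same kind of row-by-row structure.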

Web Sites

ParseHub has been a reliable and consistent web scraper for us for nearly two years now. Setting up your projects has a bit of a learning curve, but that's a small investment for how powerful the service is. It's the perfect tool for non-technical people looking to extract data, whether that's for a small one-off project or an enterprise-scale scrape running every hour.

The `.apply` method takes one argument, a `registerAction` function, which lets you add handlers for various actions.

Export Data In CSV, XLSX And JSON

Once you have taught import.io what to do, you can simply rerun the script to get the latest data. It supports large volumes of data and is still completely free.
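As a rough illustration of the export step, the sketch below serializes scraped records to CSV and JSON with Python's standard library. The function names `to_csv` and `to_json` and the sample records are assumptions, not part of any tool mentioned here. XLSX is left out because it needs a third-party package.

```python
import csv
import io
import json


def to_csv(records, fieldnames):
    """Serialize a list of dicts as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def to_json(records):
    """Serialize the same records as pretty-printed JSON text."""
    return json.dumps(records, indent=2)


# Hypothetical scraped records.
SAMPLE_RECORDS = [
    {"name": "Leash", "price": "9.50"},
    {"name": "Collar", "price": "4.25"},
]

if __name__ == "__main__":
    print(to_csv(SAMPLE_RECORDS, ["name", "price"]))
    print(to_json(SAMPLE_RECORDS))
```

Writing to an in-memory buffer keeps the sketch free of file paths; in practice the same writer can be pointed at an open file.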

Formats

Web scraping, web harvesting, or web data extraction is data scraping used for extracting data from websites. Web scraping software may access the World Wide Web directly using the Hypertext Transfer Protocol, or through a web browser. While web scraping can be done manually by a software user, the term typically refers to automated processes implemented using a bot or web crawler. When you run web scraping code, a request is sent to the URL that you have specified.

You can use it to scrape different types of data from the web, such as links, text, tables, and many more such elements. You can also choose to store the data in local storage or CouchDB.

Search engines such as Google could be considered a type of scraper site. Search engines gather content from other websites, save it in their own databases, index it, and present the scraped content to their own users. The majority of content scraped by search engines is copyrighted. Some scraper sites provide little, if any, material or information and are intended to obtain user information such as e-mail addresses, to be targeted for spam e-mail. Price aggregation and shopping sites access multiple listings of a product and allow a user to rapidly compare the prices.

It is a form of copying, in which specific data is gathered and copied from the web, typically into a central local database or spreadsheet, for later retrieval or analysis.

Extracting data from websites with web scraping tools saves time, especially for those who don't have enough coding knowledge. Web scraping software like Octoparse not only provides all the features just mentioned but also offers a data service for teams of all sizes, from start-ups to large enterprises. It can be difficult to build a web scraper for people who don't know anything about coding. Some of these tools also let you automate the task so that the data is retrieved automatically with the click of a button. A time interval can be specified so that the data is refreshed after the allotted time. Depending on the objective of a scraper, the methods by which websites are targeted differ.

You can scrape up to 500 pages per month; beyond that, you need to upgrade to a paid plan. ParseHub is a great web scraper that supports collecting data from websites that use AJAX, JavaScript, cookies, and so on. ParseHub leverages machine learning technology that can read, analyze, and transform web documents into relevant data.

However, not all web scraping software is for non-programmers. Action handlers are functions that the scraper calls at different stages of downloading a website. For example, `generateFilename` is called to generate a filename for a resource based on its URL, and `onResourceError` is called when an error occurs while requesting, handling, or saving a resource.

The web scraper tool that I like the most is Web Scraper, because it is quite easy, lightweight, and simple to use.

Web scraping a web page involves fetching it and extracting from it. Fetching is the downloading of a page (which a browser does when you view the page). Therefore, web crawling is a main component of web scraping, to fetch pages for later processing. The content of a page may be parsed, searched, reformatted, its data copied into a spreadsheet, and so on. Web scrapers typically take something out of a page, to make use of it for another purpose somewhere else.

Octoparse can also deal with information that is not displayed on the website by parsing the source code. As a result, you can achieve automatic inventory tracking, price monitoring, and lead generation at your fingertips. There are many software tools available that can be used to customize web scraping solutions.

The `plugins` option loads the Puppeteer plugin so that the scraper can clone dynamic websites correctly.

With our advanced web scraper, extracting data is as easy as clicking on the data you need. Unlike most website downloader software, this website ripper has no export step, so you can immediately browse websites offline using any browser. The utility intelligently saves website data to the local disk drive, with all necessary links fixed up. When you are after data or information, this software comes in handy because it lets you extract all of that quite easily. When you run your scraping algorithms locally, you can do so more efficiently.

Many websites have large collections of pages generated dynamically from an underlying structured source like a database. Data of the same category are typically encoded into similar pages by a common script or template. In data mining, a program that detects such templates in a particular information source, extracts its content, and translates it into a relational form is called a wrapper. Wrapper generation algorithms assume that the input pages of a wrapper induction system conform to a common template and that they can be easily identified in terms of a common URL scheme.
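The wrapper idea described above, where pages built from one template are turned into relational rows, can be sketched as follows. This is a hand-written wrapper for a made-up template, not a wrapper induction algorithm: the pattern `PRODUCT_TEMPLATE`, the function `wrap`, and the sample pages are all hypothetical.

```python
import re

# Hypothetical template: every product page carries a title heading
# followed somewhere later by a price span.
PRODUCT_TEMPLATE = re.compile(
    r'<h1 class="title">(?P<title>.*?)</h1>.*?'
    r'<span class="price">(?P<price>.*?)</span>',
    re.DOTALL,
)


def wrap(pages):
    """Turn {url: html} into one row (dict) per page that fits the template."""
    rows = []
    for url, html in pages.items():
        match = PRODUCT_TEMPLATE.search(html)
        if match:  # pages that do not follow the template are skipped
            rows.append({"url": url, **match.groupdict()})
    return rows


# Hypothetical pages sharing a common URL scheme and template.
SAMPLE_PAGES = {
    "https://shop.example/p/1":
        '<html><h1 class="title">Leash</h1><p>Sturdy.</p>'
        '<span class="price">9.50</span></html>',
    "https://shop.example/p/2":
        '<html><h1 class="title">Collar</h1>\n'
        '<span class="price">4.25</span></html>',
    "https://shop.example/about": "<html><h1>About us</h1></html>",
}

if __name__ == "__main__":
    for row in wrap(SAMPLE_PAGES):
        print(row)
```

A real wrapper generator would infer the template from the pages themselves; here it is supplied by hand to keep the sketch short.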

The tools listed below are the best low-cost web scraping tools that require no coding skills. The freeware listed below is easy to pick up and would satisfy most scraping needs with a reasonable amount of data. Open a website of your choice and start clicking on the data you want to extract. We were one of the first customers to sign up for a paid ParseHub plan. We were initially attracted by the fact that it could extract data from websites that other similar services could not (mainly due to its powerful Relative Select command).

- Web scraping a web page involves fetching it and extracting from it.

- Fetching is the downloading of a page (which a browser does when you view the page).

- Therefore, web crawling is a main component of web scraping, to fetch pages for later processing.

- The content of a page may be parsed, searched, reformatted, its data copied into a spreadsheet, and so on.

- Web scrapers typically take something out of a page, to make use of it for another purpose somewhere else.

- An example would be to find and copy names and phone numbers, or companies and their URLs, to a list (contact scraping).
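The points above, fetching a page and then extracting from it, can be sketched with the standard library alone. The example uses contact scraping as the extraction step. The function names, the deliberately simple regular expressions (the phone pattern only covers North American-style numbers), and the sample HTML are assumptions for illustration.

```python
import re
from urllib.request import urlopen

# Deliberately simple patterns; real-world contact data is messier.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\(?\d{3}\)?[ .-]?\d{3}[ .-]?\d{4}")


def fetch(url, timeout=10):
    """Fetching: download a page and return its body as text."""
    with urlopen(url, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def extract_contacts(html):
    """Extracting: pull unique e-mail addresses and phone numbers from text."""
    return {
        "emails": sorted(set(EMAIL.findall(html))),
        "phones": sorted(set(PHONE.findall(html))),
    }


# Hypothetical page content, used here instead of a live request.
SAMPLE_HTML = """
<ul>
  <li>Ada Lovelace, <a href="mailto:ada@example.com">ada@example.com</a>, (555) 010-1234</li>
  <li>Front desk: 555-010-9876</li>
</ul>
"""

if __name__ == "__main__":
    # With a real site this would be: extract_contacts(fetch(some_url))
    print(extract_contacts(SAMPLE_HTML))
```

Keeping `fetch` and `extract_contacts` separate mirrors the two stages in the list and lets the extraction be tested without network access.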

In response to the request, the server sends the data and lets you read the HTML or XML page. The code then parses the HTML or XML page, finds the data, and extracts it.

Dexi.io is intended for advanced users who have proficient programming skills. It has an outstanding "Fast Scrape" feature, which quickly scrapes data from a list of URLs that you feed in. Extracting data from sites using OutWit Hub doesn't demand programming skills. You can refer to our guide on using OutWit Hub to get started with web scraping using the tool. With its modern features, you will be able to handle the details on any website. People with no programming skills may need to take a while to get used to it before creating a web scraping robot. Check out their homepage to learn more about the knowledge base.

The previous code imports the installed libraries and the Node.js path helper (to create an absolute path from the current directory). We will call the `scrape` method, providing as its first argument an object with the configuration required to start cloning the website.

The open web is by far the greatest global repository of human knowledge; there is almost no information that you can't find by extracting web data. Web scraping is the process of gathering information from the Internet. Even copy-pasting the lyrics of your favorite song is a form of web scraping! However, the term "web scraping" usually refers to a process that involves automation. Some websites don't like it when automatic scrapers gather their data, while others don't mind.

80legs is a powerful yet flexible web crawling tool that can be configured to your needs. It supports fetching huge amounts of data, along with the option to download the extracted data instantly.

Moreover, some semi-structured data query languages, such as XQuery and HTQL, can be used to parse HTML pages and to retrieve and transform page content. The pages being scraped may include metadata or semantic markup and annotations, which can be used to locate specific data snippets. If the annotations are embedded in the pages, as Microformat does, this technique can be viewed as a special case of DOM parsing.
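As a hedged illustration of using embedded annotations to locate data, the sketch below collects the text of elements whose `class` attribute carries a wanted name. The class names `p-name` and `u-email` follow the microformats2 h-card vocabulary; the parser class `AnnotatedText`, the helper `find_annotated`, and the sample markup are assumptions. It works on a stream of parse events rather than a full DOM.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag and so must not be tracked.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img",
             "input", "link", "meta", "source", "track", "wbr"}


class AnnotatedText(HTMLParser):
    """Collect (class name, text) pairs for elements with a wanted class."""

    def __init__(self, wanted):
        super().__init__()
        self.wanted = set(wanted)
        self.found = []   # results, appended when each element closes
        self._open = []   # one [matched class or None, text parts] per open element

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        classes = (dict(attrs).get("class") or "").split()
        match = next((c for c in classes if c in self.wanted), None)
        self._open.append([match, []])

    def handle_data(self, data):
        # Text belongs to every open element that matched.
        for name, parts in self._open:
            if name is not None:
                parts.append(data)

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self._open:
            return
        name, parts = self._open.pop()
        if name is not None:
            self.found.append((name, "".join(parts).strip()))


def find_annotated(html, wanted):
    parser = AnnotatedText(wanted)
    parser.feed(html)
    parser.close()
    return parser.found


# Hypothetical h-card markup.
SAMPLE_HTML = """
<div class="h-card">
  <span class="p-name">Ada Lovelace</span><br>
  <a class="u-email" href="mailto:ada@example.com">ada@example.com</a>
</div>
"""

if __name__ == "__main__":
    print(find_annotated(SAMPLE_HTML, {"p-name", "u-email"}))
```

The sketch assumes reasonably well-formed markup, with every non-void start tag matched by an end tag.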

Regex Scraper is a simple Chrome extension that uses regular expressions to extract data from websites. To use this feature, you need a basic understanding of regular expressions.

Data Scraper is another data scraping Chrome extension that can be used as data mining software. Just follow these steps. Once you download the software, it will ask you to browse to the page from which you want to download data. After that, you will need to highlight the fields that you want to download. Based on that, it will find similar data on the page and let you download all of it.

This has drastically cut the time we spend on administering tasks related to updating data. Our content is more up-to-date and revenues have increased significantly as a result. I would strongly recommend ParseHub to any developers wishing to extract data for use on their sites.

It is a good alternative web scraping tool if you need to extract a light amount of data from websites immediately. Web data extraction includes, but is not limited to, social media, e-commerce, marketing, real estate listings, and many others. Unlike other web scrapers that only scrape content with a simple HTML structure, Octoparse can handle both static and dynamic websites with AJAX, JavaScript, cookies, and so on. You can create a scraping task to extract data from a complex website, such as one that requires login and pagination.

The most important options are the `urls` property, which expects an array of strings where each item is the URL of a page of the website that you want to clone, and the `directory` option, which corresponds to the local directory path where the website content should be placed.

In this article on web scraping with Python, you will learn about web scraping in brief and see how to extract data from a website with a demonstration.

Webhose.io lets you get real-time data by scraping online sources from all over the world into various clean formats. YellowPageRobot (YPR) is a simple tool that helps you extract data from Yellow Pages and from other websites as well. OutWit Hub Light is a very simple program that can be used to extract data from websites. The web scraper claims to crawl 600,000+ domains and is used by big players like MailChimp and PayPal.

Web scraping tools are specially developed for extracting information from websites. They are also known as web harvesting tools or web data extraction tools. These tools are useful for anyone trying to collect some form of data from the Internet. Web scraping is the new data entry technique that doesn't require repetitive typing or copy-pasting. Luckily, there are tools available for people with or without programming skills. Here is our list of the 30 most popular web scraping tools, ranging from open-source libraries to browser extensions to desktop software. Use our free Chrome extension or automate tasks with our Cloud Scraper. Here is a list of the best free web scraper software for Windows. These programs prove to be very useful when you have to work with large amounts of data.

Then you will get the data and can extract other content by adding new columns using XPath or jQuery. This tool is intended for intermediate to advanced users who know how to write XPath. Scraper is another easy-to-use screen web scraper that can easily extract data from an online table and upload the result to Google Docs.

It has three types of robots for creating a scraping task: Extractor, Crawler, and Pipes. It provides various tools that let you extract the data more precisely. With the Chrome extension, you can create a sitemap (plan) of how a website should be navigated and what data should be scraped. The cloud extension can scrape a large volume of data and run multiple scraping tasks concurrently.

From scraping highly secured websites to handling large amounts of data (millions of records), I should be able to give you a hand. I crawl the web to scrape data for startups and big companies around the world.

Sometimes people see web pages with URL fragments (#) and AJAX content loading and think a site can't be scraped. If a site is using AJAX to load the data, that probably makes it even easier to pull the information you need.
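The remark about AJAX can be made concrete: when a page loads its data from a JSON endpoint, a scraper can often read that JSON directly instead of parsing HTML. The sketch below assumes a hypothetical payload shape with an `items` list of `title` and `price` fields; the function names are also assumptions, and a real site's endpoint and structure would have to be found by inspecting its network requests.

```python
import json
from urllib.request import Request, urlopen


def fetch_json(url, timeout=10):
    """Request a JSON endpoint the way the page's own script would."""
    request = Request(url, headers={"Accept": "application/json"})
    with urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def extract_listings(payload):
    """Pull (title, price) pairs out of a decoded payload.

    The "items"/"title"/"price" keys are an assumed, hypothetical shape.
    """
    return [(item["title"], item["price"]) for item in payload.get("items", [])]


# Hypothetical response body, used here instead of a live request.
SAMPLE_BODY = '{"items": [{"title": "Leash", "price": 9.5}, {"title": "Collar", "price": 4.25}]}'

if __name__ == "__main__":
    # With a real endpoint this would be: extract_listings(fetch_json(endpoint_url))
    print(extract_listings(json.loads(SAMPLE_BODY)))
```

Because the data already arrives structured, no HTML parsing is needed, which is why AJAX-driven sites can be easier to scrape.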

An example would be to find and copy names and phone numbers, or companies and their URLs, to a list (contact scraping).

OutWit Hub is a Firefox extension that can be easily downloaded from the Firefox add-ons store. Once it is installed and activated, you can scrape content from websites instantly. There are other ways to scrape websites, such as online services, APIs, or writing your own code. In this article, we'll see how to implement web scraping with Python. Screaming Frog SEO Spider is a simple tool used to scrape data from websites, mainly for SEO purposes.

A scraper site is a website that copies content from other websites using web scraping. The content is then mirrored with the goal of creating revenue, usually through advertising and sometimes by selling user data.

The team at ParseHub were helpful from the beginning and have always responded promptly to queries. Over the last few years we have witnessed great improvements in both functionality and reliability of the service. We use ParseHub to extract relevant data and include it on our travel website.