Data Scraping for SEO & Analytics

Frequently Asked Questions About Web Scraping

One of the most important things you need to scrape the web effectively is a fundamental understanding of how to manage (or wrangle) and “clean” your scraped data. Data on the web is often imperfectly structured, so you will typically need to do some cleanup.
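
As an illustration of that cleanup, here is a minimal sketch that uses only the standard library. It assumes each scraped row is a dict with hypothetical `name` and `price` fields. It normalizes whitespace, parses a price string into a number, and drops duplicate rows.

```python
import re
import unicodedata
from typing import Optional


def clean_text(raw: str) -> str:
    """Normalize one scraped text field."""
    # NFKC turns compatibility characters such as non-breaking spaces into plain ones.
    text = unicodedata.normalize("NFKC", raw)
    # Collapse the runs of spaces, tabs and newlines left over from the HTML layout.
    return re.sub(r"\s+", " ", text).strip()


def parse_price(raw: str) -> Optional[float]:
    """Turn a scraped price such as ' $1,299.00 ' into 1299.0; None if no number is present."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", raw)
    if match is None:
        return None
    return float(match.group().replace(",", ""))


def clean_rows(rows: list) -> list:
    """Clean the hypothetical 'name' and 'price' fields and drop duplicates, keeping the first."""
    seen = set()
    cleaned = []
    for row in rows:
        item = {
            "name": clean_text(row.get("name", "")),
            "price": parse_price(row.get("price", "")),
        }
        key = (item["name"], item["price"])
        if key not in seen:
            seen.add(key)
            cleaned.append(item)
    return cleaned
```

The price pattern assumes US-style thousands separators ("1,299.00"). A European-formatted price such as "1.299,00" would need a different rule.
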

How Is Web Scraping Used In Business?

Websites also tend to monitor the origin of their traffic, so if you want to scrape a website in Brazil, try not to do it with proxies in Vietnam, for instance. Even so, if you solve CAPTCHAs or switch proxies as soon as you see one, websites can still detect your little scraping job. Most of the time, when JavaScript code tries to detect whether it is running in headless mode, it is malware trying to evade behavioral fingerprinting.
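
One way to follow the "match the proxy to the site's country" advice is to keep the proxy pool keyed by country and refuse to fall back to another country. This is a minimal sketch. The pool, its addresses (documentation-range placeholders) and the `proxies_for` helper are all hypothetical. The returned mapping uses the `{"http": ..., "https": ...}` format that the Requests library accepts in its `proxies=` argument.

```python
import random
from typing import Dict, List, Optional

# Hypothetical proxy pool keyed by ISO 3166 country code. The addresses are documentation
# placeholders, not real proxies; replace them with your provider's endpoints.
PROXY_POOL: Dict[str, List[str]] = {
    "BR": ["http://203.0.113.10:8080", "http://203.0.113.11:8080"],
    "VN": ["http://198.51.100.20:8080"],
}


def proxies_for(country: str, pool: Dict[str, List[str]] = PROXY_POOL,
                rng: Optional[random.Random] = None) -> Dict[str, str]:
    """Pick a proxy located in the same country as the target site.

    The result uses the mapping format accepted by the `proxies=` argument of requests.get.
    """
    candidates = pool.get(country.upper())
    if not candidates:
        # Silently falling back to a proxy in another country is what we want to avoid.
        raise LookupError(f"no proxy available for country {country!r}")
    chosen = (rng or random).choice(candidates)
    return {"http": chosen, "https": chosen}
```

Usage would look like `requests.get(url, proxies=proxies_for("BR"), timeout=10)` for a Brazilian target site. Raising an error, instead of quietly picking any proxy, makes a missing region visible before it gets your scraper flagged.
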

Is Web Scraping Legal?

But for beginners, Screaming Frog offers all the options you need to extract data using CSS Path, XPath and regex. SEO is a growing field, and as it continues to expand, agencies will use tools like web scraping to make sure their clients can promote themselves across various search engines. Web scraping is an important tool in the SEO industry, and as the 21st century progresses, it will become even more prevalent throughout the field. Cloud-based web scrapers run on an off-site server, which is usually provided by the company that developed the scraper itself.
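
To show what the three extraction methods look like outside Screaming Frog, here is a small Python sketch that runs each of them against the same page. It assumes the third-party packages `beautifulsoup4` (for CSS selectors) and `lxml` (for XPath) are installed. The sample HTML, class names and email address are made up.

```python
import re

from bs4 import BeautifulSoup
from lxml import html as lxml_html

# A tiny stand-in for a downloaded page; the class names and address are made up.
SAMPLE_HTML = """<html><head><title>SEO Tools</title></head>
<body>
  <h2 class="tool-name">Screaming Frog</h2>
  <h2 class="tool-name">Xenu Link Sleuth</h2>
  <p>Contact: seo-team@example.com</p>
</body></html>"""


def extract_with_css(page: str) -> list:
    """CSS selector: every <h2> element with the class 'tool-name'."""
    soup = BeautifulSoup(page, "html.parser")
    return [el.get_text(strip=True) for el in soup.select("h2.tool-name")]


def extract_with_xpath(page: str) -> list:
    """XPath: the same elements, addressed by tag name and attribute value."""
    tree = lxml_html.fromstring(page)
    return [text.strip() for text in tree.xpath('//h2[@class="tool-name"]/text()')]


def extract_with_regex(page: str) -> list:
    """Regex: match a text pattern (here a simple email pattern) regardless of markup."""
    return re.findall(r"[\w.+-]+@[\w-]+\.[\w.]+", page)
```

CSS selectors and XPath follow the document structure, so they are usually the safer choice for elements. Regex is handy for patterns that can appear anywhere in the text, but it breaks easily when the markup changes.
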

What Are The Best Tools For Web Scraping?

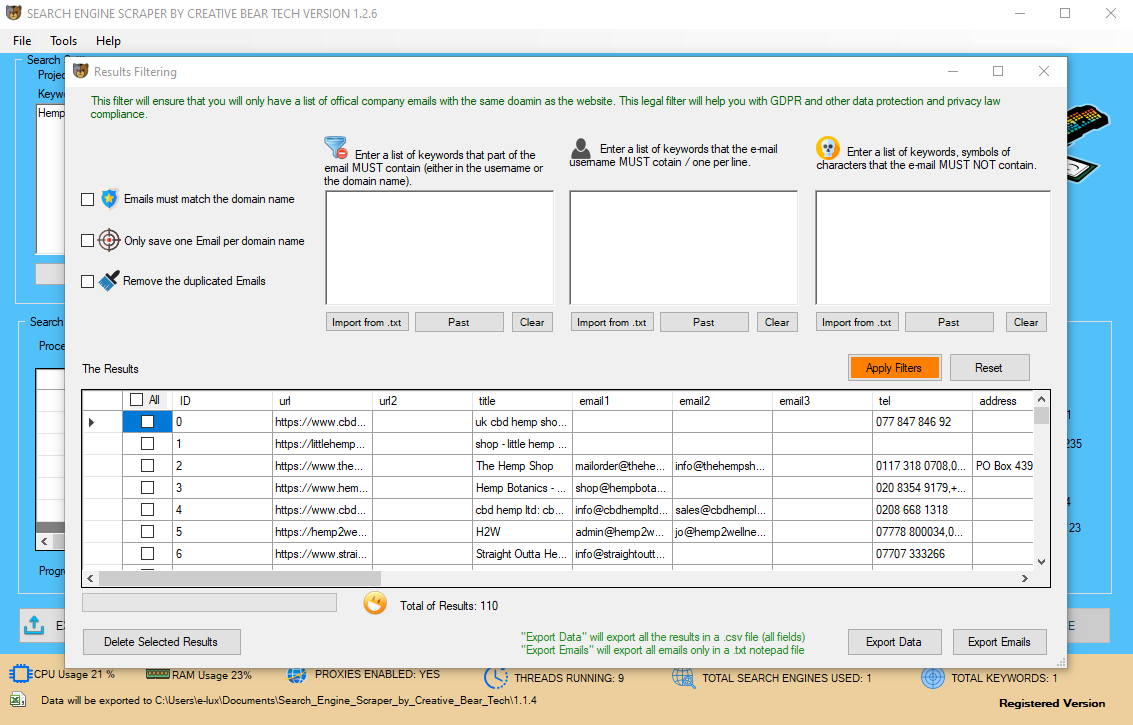

Web scrapers are used constantly by many companies for different business-related purposes. Scrapers can be customized to predict business trends, and companies use those insights to grow further. Companies can also scrape search engine results efficiently for search engine marketing. With the right keywords, we make sure the site reaches the top of the search engine rankings. News agencies now use web scrapers to extract information from the web, especially from multiple news outlets, so that the best news snippets can be sent to mobile devices.

How Is Web Scraping Used In Seo?

Selenium is an open-source automated testing suite for web applications across different browsers and platforms. It has bindings for Python, Java, C#, Ruby and JavaScript. Here we are going to perform web scraping using Selenium and its Python bindings. With the help of Requests, we can get the raw HTML of web pages, which can then be parsed to retrieve the data. Screaming Frog can crawl both small and very large websites efficiently, while allowing you to analyze the results in real time. ScrapingBee is a web scraping API that lets you scrape the web without getting blocked.

If your scraper stops working partway through a large crawl, a quick fix is to break the scraping up into several smaller jobs and see whether you have the same problem. For instance, instead of running the scraper on 500 pages, run it on the first 250 and then stop it (or set it to stop). The problem could be happening because your session is expiring, or because the site blocks you after you access so many pages so quickly. In general, web scraping is legal, although many websites don't allow it.
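
The "split the crawl into smaller jobs" advice can be sketched with Requests as follows. This is a minimal illustration, not a drop-in tool. The batch size of 250 mirrors the example above. The 60-second pause and the helper names `batches`, `run_job` and `run_in_jobs` are assumptions. Each job gets its own fresh `requests.Session`, so an expired session from one job does not carry over into the next.

```python
import time
from typing import Dict, Iterator, List, Union

import requests


def batches(urls: List[str], size: int = 250) -> Iterator[List[str]]:
    """Yield consecutive slices of at most `size` URLs, e.g. 500 URLs -> two jobs of 250."""
    for start in range(0, len(urls), size):
        yield urls[start:start + size]


def run_job(urls: List[str], timeout: float = 10.0) -> Dict[str, Union[int, str]]:
    """Fetch one batch with its own fresh session; map each URL to a status code or an error."""
    results: Dict[str, Union[int, str]] = {}
    with requests.Session() as session:
        for url in urls:
            try:
                results[url] = session.get(url, timeout=timeout).status_code
            except requests.RequestException as exc:
                results[url] = f"error: {type(exc).__name__}"
    return results


def run_in_jobs(urls: List[str], size: int = 250,
                pause_seconds: float = 60.0) -> Dict[str, Union[int, str]]:
    """Run the batches one after another, pausing between them."""
    all_results: Dict[str, Union[int, str]] = {}
    for index, batch in enumerate(batches(urls, size)):
        if index:
            # Pause between jobs so the site sees a gentler overall request rate.
            time.sleep(pause_seconds)
        all_results.update(run_job(batch))
    return all_results
```

If the first job of 250 completes cleanly but the second starts failing at a similar point, the problem is more likely rate-based blocking than a bug in the parsing logic.
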

They can also be used to analyze and monitor news items on an ongoing basis. Screaming Frog is by no means the only tool you can use for web scraping (Python is usually considered the go-to solution).

Such headless-aware malicious JavaScript behaves properly inside a scanning environment and badly inside real browsers. That is why the team behind Chrome's headless mode tries to make it indistinguishable from a real user's web browser: to stop malware from doing this. And that is why web scrapers, in this arms race, can profit from that effort.

However, most web pages are designed for human end users and not for ease of automated use. As a result, specialized tools and software have been developed to make scraping web pages easier. There are two excellent tools that can help you scrape the on-page elements (title tags, meta descriptions, meta keywords, etc.) of an entire website: the evergreen and free Xenu Link Sleuth, and the mighty Screaming Frog SEO Spider.

All I have to do now is copy and paste this final path expression as an argument to the IMPORTXML function in a Google Docs spreadsheet. The function will then extract all of the names of Google Analytics tools from my killer SEO tools page.

When indexing the web, search engine crawlers (also called spiders or bots) collect details about each page, including titles, images, keywords, and other linked pages. It is through this indexing that a search engine can return results that pertain to the search term or keyword you enter.
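
To make "on-page elements" concrete, here is a minimal standard-library sketch of what a crawler like Xenu or Screaming Frog records for a single page: the `<title>` text and the `description` and `keywords` meta tags. Crawling the rest of the site is not shown. The class and function names are illustrative.

```python
from html.parser import HTMLParser


class OnPageParser(HTMLParser):
    """Collect the <title> text and the description/keywords <meta> tags of one page."""

    def __init__(self) -> None:
        super().__init__()
        self.elements = {"title": "", "description": "", "keywords": ""}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            # Meta names are case-insensitive in practice ("Description" vs "description").
            name = (attrs.get("name") or "").lower()
            if name in ("description", "keywords"):
                self.elements[name] = (attrs.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.elements["title"] += data


def on_page_elements(page: str) -> dict:
    """Return the title, meta description and meta keywords found in an HTML string."""
    parser = OnPageParser()
    parser.feed(page)
    parser.close()
    result = dict(parser.elements)
    result["title"] = result["title"].strip()
    return result
```

Running this over every URL a crawler discovers gives you roughly the same table of titles and meta tags that these SEO tools export.
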

But there are also plenty of malicious scrapers out there that might steal your content and publish it on their own website in order to outrank you.

The simplest form of web scraping is manually copying and pasting data from a web page into a text file or spreadsheet. Automated scraping often requires adding delays between requests, or using a proxy or CAPTCHA-solving service. The scraped data can be analyzed for SEO and pricing content to give you an idea of what your competitors are doing. Every business faces competition today, so companies regularly scrape their competitors' information to monitor their moves. Depending on your business, you can find many areas where web data can be of great use.

Web scraping is thus an art used to make data gathering automated and fast. It is the process of automatically mining data or collecting information from the World Wide Web. Current web scraping solutions range from ad hoc approaches that require human effort to fully automated systems that can convert entire websites into structured data, with limitations. Web pages are built using text-based markup languages (HTML and XHTML) and frequently contain a wealth of useful data in text form.

Local web scrapers run on your computer, using its resources and internet connection. This means that if your web scraper has high CPU or RAM usage, your computer might become quite slow while the scrape runs. With long scraping tasks, this could put your computer out of commission for hours. On the other hand, some web scrapers have a full-fledged UI in which the website is fully rendered, so the user can simply click on the data they want to scrape. These web scrapers are usually easier to work with for most people with limited technical knowledge.
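
Adding delays between requests is easy to get subtly wrong. For example, you might always sleep a fixed time even when the previous request was already slow. Below is a minimal sketch of a throttle that guarantees a randomized minimum gap between consecutive requests. The 2 to 5 second default range is an assumption, not a universal rule. The clock and sleep functions are injectable so the logic can be tested without real waiting.

```python
import random
import time
from typing import Callable, Optional


class PoliteThrottle:
    """Keep a randomized minimum gap between consecutive requests to the same site."""

    def __init__(self, min_delay: float = 2.0, max_delay: float = 5.0,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep,
                 rng: Optional[random.Random] = None) -> None:
        if min_delay < 0 or max_delay < min_delay:
            raise ValueError("need 0 <= min_delay <= max_delay")
        self.min_delay = min_delay
        self.max_delay = max_delay
        self._clock = clock
        self._sleep = sleep
        self._rng = rng or random.Random()
        self._last: Optional[float] = None

    def wait(self) -> float:
        """Sleep until the next request may go out; return the seconds actually slept."""
        gap = self._rng.uniform(self.min_delay, self.max_delay)
        slept = 0.0
        if self._last is not None:
            # Only sleep for whatever part of the gap has not already elapsed.
            remaining = self._last + gap - self._clock()
            if remaining > 0:
                self._sleep(remaining)
                slept = remaining
        self._last = self._clock()
        return slept
```

In a scraping loop, you would call `throttle.wait()` immediately before each `requests.get(...)`. The randomized gap avoids the perfectly regular request rhythm that is easy for a site to spot.
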
The scraper then loads the entire HTML code for the page in question. More advanced scrapers will render the entire website, including CSS and JavaScript elements. Although web scraping can be done manually, automated tools are usually preferred because they can be more cost-effective and work faster. Tools like Webhose.io provide real-time data for thousands of websites, and they have a free plan for making up to 1,000 requests per month. Web scraping is a complex task, and the complexity multiplies if the website is dynamic. For instance, if the website is built with an advanced browser tool such as Google Web Toolkit (GWT), the resulting JS code is machine-generated and difficult to understand and reverse engineer.

- It provides all the tools we need to extract, process and structure data from websites.

- Fetching is the downloading of a web page (which a browser does when you view the page).

- Web scraping is the use of an automation script to extract data from websites.

- The automation script used for web scraping is called a web scraper.
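
The definitions above map onto two small functions: one that fetches (downloads) a page and one that extracts data from it. This is a minimal sketch, assuming the Requests library is installed. Extracting links is just an example target. The `User-Agent` string is a made-up placeholder.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

import requests


def fetch(url: str, timeout: float = 10.0) -> str:
    """Fetching: download the page, as a browser does when you view it."""
    # The User-Agent value is a hypothetical placeholder; identify your own scraper honestly.
    response = requests.get(url, timeout=timeout, headers={"User-Agent": "example-scraper/0.1"})
    response.raise_for_status()
    return response.text


class LinkExtractor(HTMLParser):
    """Extraction: collect every <a href> on the page as an absolute URL."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(urljoin(self.base_url, href))


def extract_links(page: str, base_url: str) -> list:
    parser = LinkExtractor(base_url)
    parser.feed(page)
    parser.close()
    return parser.links


def scrape(url: str) -> list:
    """The 'web scraper': fetch a page, then extract data from it."""
    return extract_links(fetch(url), url)
```

Keeping fetching and extraction separate makes the extraction step testable on saved HTML, without hitting the network each time.
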

According to the United Nations Global Audit of Web Accessibility, more than 70% of websites are dynamic in nature and rely on JavaScript for their functionality.

If you're scraping businesses on Yelp, do you HAVE to visit the businesses' pages themselves? The data you need might be available on the results page, without visiting the actual business listing. However, if you're going to end up with data that contains extra formatting, you need to start familiarizing yourself with data hygiene.

Bag of Words (BoW), a useful model in natural language processing, is mainly used to extract features from text. After the features have been extracted, they can be used for modeling in machine learning algorithms, because raw text can't be used directly in ML applications. You can perform text analysis with the Python library called the Natural Language Toolkit (NLTK).

However, consult a lawyer, as the technicalities involved might make a particular scraping project illegal.

Rapidly rotating proxies are best when you don't need to maintain a session. However, for websites that require a login and need the session maintained, you need proxies that change IP address only after a specified period of time. I used to scrape public proxies, but they aren't a good solution for well-known websites like Amazon. If you are a JavaScript developer, you can use Cheerio to parse HTML documents and Puppeteer to control the Chrome browser.

The web media content we acquire during scraping can be images, audio and video files, in the form of non-web pages, as well as data files. But can we trust the downloaded data, especially the extension of the files we are going to download and store on our computer?
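
One common answer to the "can we trust the extension?" question is to compare the extension against the file's leading "magic" bytes. This sketch covers only a few formats (PNG, JPEG, GIF, PDF), and the helper names are illustrative. A real pipeline might use a dedicated file-type detection library instead.

```python
from pathlib import Path
from typing import Optional

# Leading "magic" bytes of a few common formats.
MAGIC_NUMBERS = {
    b"\x89PNG\r\n\x1a\n": ".png",
    b"\xff\xd8\xff": ".jpg",
    b"GIF87a": ".gif",
    b"GIF89a": ".gif",
    b"%PDF-": ".pdf",
}

# Extensions that name the same format.
ALIASES = {".jpeg": ".jpg"}


def sniff_extension(data: bytes) -> Optional[str]:
    """Guess the real format from the first bytes of the downloaded content."""
    for magic, extension in MAGIC_NUMBERS.items():
        if data.startswith(magic):
            return extension
    return None


def extension_matches(filename: str, data: bytes) -> bool:
    """True only if the file's extension agrees with what its content looks like."""
    claimed = Path(filename).suffix.lower()
    claimed = ALIASES.get(claimed, claimed)
    actual = sniff_extension(data)
    return actual is not None and actual == claimed
```

A mismatch, such as an "image" that is actually an HTML error page, is a good reason to discard the download before you store it.
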
This one can be a doozy, as it really depends on the specific error or problem you're seeing. The most likely cause, unfortunately, is that your scraper has malfunctioned.

Python is also used for other useful projects related to cyber security, penetration testing and digital forensics. Using only core Python, web scraping can be done without any third-party tool. In the download step, a web scraper fetches the requested contents from multiple web pages. After all these steps are successfully carried out, the web scraper analyzes the data it has obtained. The term web scraping covers different methods of collecting information and essential data from across the Internet. It is also called web data extraction, screen scraping, or web harvesting.

This module outputs company review information, such as the aggregated score for the company, the number of reviews, a review summary, aggregated values of rating categories, and more. We offer both basic (data-center) and premium (residential) proxies, so you are far less likely to get blocked while scraping the web. We also offer the option to render all pages inside a real browser (Chrome), which allows us to support websites that depend heavily on JavaScript. In this post we will look at the different web scraping tools currently available, both commercial and open-source.

Real-time data scraping uses software applications that monitor even slight changes through web crawling, to provide up-to-date data insights to companies. A real-time web crawler can check the status of individual web pages at a specified interval or after a trigger event occurs.
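
The core of that real-time monitoring is deciding whether a page has changed since the last check. A minimal way to do this is to store a hash per URL. This sketch uses SHA-256 from the standard library. The scheduling loop (polling at an interval or on a trigger) is left out, and `ChangeMonitor` is an illustrative name.

```python
import hashlib
from typing import Dict


class ChangeMonitor:
    """Remember a content fingerprint per URL and report when it changes."""

    def __init__(self) -> None:
        self._fingerprints: Dict[str, str] = {}

    @staticmethod
    def fingerprint(content: str) -> str:
        return hashlib.sha256(content.encode("utf-8")).hexdigest()

    def check(self, url: str, content: str) -> bool:
        """Return True if this URL is new or its content differs from the previous check."""
        digest = self.fingerprint(content)
        changed = self._fingerprints.get(url) != digest
        self._fingerprints[url] = digest
        return changed
```

Hashing the whole HTML will also flag trivial changes such as rotating ads or timestamps. In practice you would usually hash only the extracted fields you care about, for example a price or a stock status.
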
Web crawling and scraping processes are AI-aided copy-and-paste functions that speed up mundane manual work to deliver data faster and more efficiently. Real-time web crawling and scraping is an automated process of gathering vast amounts of online data. This process not only crawls huge numbers of web pages at lightning speed, but also mines their information and delivers it in an easy-to-use format such as .csv files.

First, we need to import the Python libraries for scraping; here we are working with requests, plus boto3 for saving data to an S3 bucket. To process the scraped data, we must store it on our local machine in a particular format, such as a spreadsheet (CSV) or JSON, or sometimes in a database like MySQL.

With Python, we can scrape any website or particular elements of a web page, but do you know whether it is legal? Before scraping any website, we must understand the legality of web scraping. This chapter will explain the concepts related to the legality of web scraping. SEMrush, for example, won't scrape every website, because many websites block scrapers.

The tool you choose will ultimately depend on how many websites you need to scrape. You can start with a simple content-scraping plugin like Scraper for Chrome. In other words, you can pull just the data you need from websites without doing the manual work, but you should not use that data to copy content. However, in this arms race, web scrapers tend to have a big advantage, and here is why. Web scraping, or crawling, is the act of fetching data from a third-party website by downloading and parsing the HTML code to extract the data you want.
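
For the local-storage step (CSV or JSON), here is a minimal standard-library sketch. It writes the same list of scraped records to both formats. The S3 upload via boto3 and the MySQL option are not shown, and the `save_records` name and the `scraped` file stem are illustrative.

```python
import csv
import json
from pathlib import Path
from typing import List, Tuple


def save_records(records: List[dict], out_dir: str, stem: str = "scraped") -> Tuple[Path, Path]:
    """Write the records to <stem>.csv and <stem>.json inside out_dir."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)

    # Use the union of all keys as CSV columns; missing values are written as empty cells.
    fieldnames = sorted({key for record in records for key in record})
    csv_path = out / f"{stem}.csv"
    with csv_path.open("w", newline="", encoding="utf-8") as fh:
        writer = csv.DictWriter(fh, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(records)

    # JSON keeps each record exactly as scraped (no empty placeholders).
    json_path = out / f"{stem}.json"
    with json_path.open("w", encoding="utf-8") as fh:
        json.dump(records, fh, ensure_ascii=False, indent=2)

    return csv_path, json_path
```

CSV is convenient for spreadsheets. JSON preserves records whose fields vary from page to page, which is common when scraping.
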
It basically performs two checks: first, whether the page title is the same as the search string, i.e. ‘Python’, and second, whether the page has a content div. In this chapter, let us understand how to scrape websites that work on user-based inputs, that is, form-based websites.

If you are using a scraping tool to pull top questions for blog inspiration, make sure your scraper is configured to pull posts that have answers. So while you can get content from them with a scraper, it's considered “black hat” scraping, and you don't really want to do it. You can also run this tool on competitor websites to see how they organize their content and what their audience finds most appealing.

Content aggregators: web scraping is widely used by content aggregators, such as news aggregators and job aggregators, to provide up-to-date data to their users. The terms web crawling and web scraping are often used interchangeably, as the basic idea of both is to extract data. For big websites like Amazon or eBay, you can scrape the search results with a single click, without having to manually click and select the element you want. One of the great things about Data Miner is that there is a public recipe list you can search to speed up your scraping.

Mozenda is enterprise web scraping software designed for all kinds of data extraction needs. They claim to work with 30% of the Fortune 500, for use cases like large-scale price monitoring, market research, and competitor monitoring. It allows you to crawl a website's URLs to analyze them and to perform technical audits and onsite SEO. Data reporting sites, such as Google Analytics or Crunchbase, often don't provide an option to download all the data at once. Web scraping can be used to collect this data for further processing and analysis.
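
The two checks on a form-based site's result page can be written as a small function over the returned HTML. This sketch uses the standard library's `html.parser`. It assumes, hypothetically, that "content div" means a `<div>` with `id="content"` or the class `content`. Submitting the form itself (for example with Requests) is not shown.

```python
from html.parser import HTMLParser


class ResultPageChecker(HTMLParser):
    """Record the page title and whether a content <div> is present."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.has_content_div = False
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "div":
            attrs = dict(attrs)
            classes = (attrs.get("class") or "").split()
            # Assumption: the content div is marked by id="content" or class="content".
            if attrs.get("id") == "content" or "content" in classes:
                self.has_content_div = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def check_result_page(page: str, search_string: str) -> bool:
    """Check 1: title equals the search string. Check 2: a content div exists."""
    checker = ResultPageChecker()
    checker.feed(page)
    checker.close()
    return checker.title.strip() == search_string and checker.has_content_div
```
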
Large sites such as eBay or Walmart tend to have a wide array of different layouts and styles for different products or events, which makes it difficult for an automated scraper to recognize the required data. To solve this, tests must be run on a large sample set of URLs in order to identify every possible page style and avoid missing any data. Sites usually block scrapers because of their high activity on the site, either by showing a CAPTCHA or by banning the scraper's IP entirely.
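
A scraper can at least notice when it is being blocked, instead of silently saving CAPTCHA pages as data, and then back off. This is a heuristic sketch. The status codes (403, 429, 503) and the marker phrases are common signals but are assumptions, and every site behaves differently. The backoff schedule is a plain exponential one with a cap.

```python
from typing import List

# Heuristic signals; adjust them for the sites you actually scrape.
BLOCK_STATUS_CODES = {403, 429, 503}
CAPTCHA_MARKERS = ("captcha", "are you a robot", "unusual traffic")


def looks_blocked(status_code: int, body: str) -> bool:
    """Guess whether a response is a block or CAPTCHA page rather than real content."""
    if status_code in BLOCK_STATUS_CODES:
        return True
    lowered = body.lower()
    return any(marker in lowered for marker in CAPTCHA_MARKERS)


def backoff_delays(base: float = 5.0, factor: float = 2.0,
                   max_delay: float = 300.0, attempts: int = 5) -> List[float]:
    """Exponential backoff schedule in seconds, capped at max_delay."""
    return [min(base * factor ** i, max_delay) for i in range(attempts)]
```

When `looks_blocked` returns True, you would wait for the next delay in the schedule before retrying, and stop (or switch proxy) once the schedule is exhausted.
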

This process is also used to implement custom search engines. Why not try scraping some publicly available data and turning it into a visually pleasing experience? If visual and graphic design isn't quite your thing, how about creating something useful for your community or your friends? You could build a scraper that tracks the prices of flats in a specific location and sends alerts when prices drop or a new offer appears. Choosing the right product and service is essential to running an online business.
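
The alerting part of such a flat-price tracker comes down to comparing two scrapes. This is a minimal sketch that assumes each scrape has already been reduced to a dict of hypothetical listing IDs to prices. How the alerts are delivered (email, chat message, etc.) is left out.

```python
from typing import Dict, List


def price_alerts(previous: Dict[str, float], current: Dict[str, float]) -> List[str]:
    """Compare two scrapes (listing id -> price) and describe new offers and price drops."""
    alerts = []
    for listing_id, price in sorted(current.items()):
        old_price = previous.get(listing_id)
        if old_price is None:
            alerts.append(f"new offer {listing_id}: {price:,.0f}")
        elif price < old_price:
            alerts.append(f"price drop {listing_id}: {old_price:,.0f} -> {price:,.0f}")
    return alerts
```

Listings that disappeared or went up in price are ignored here. Both are easy to add if you care about them.
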

This makes it important to know what kind of data we are going to store locally. Web scraping usually involves downloading, storing and processing web media content. In this chapter, let us understand how to process the content downloaded from the web.

With a cloud-based scraper, your computer's resources are freed up while the scraper runs and gathers data. You can then work on other tasks and be notified later, once your scrape is ready to be exported. The web is the largest source of information ever created by humankind. But running your own scraper means you have to learn the software, spend hours setting it up to get the desired data, host it yourself, worry about getting blocked (less of a concern if you use an IP rotation proxy), and so on. Instead, you can use a cloud-based solution to offload all these headaches to the provider, and concentrate on extracting data for your business. As a developer, you may know that web scraping, HTML scraping, web crawling, and any other web data extraction can be very difficult. There is a lot of work involved in obtaining the correct page source, identifying the source accurately, rendering JavaScript, and gathering data in a usable form.

What trends are there among top-performing websites and content, and how can we learn from them? Overall, this approach will lead to a much better content landscape in the future. Web scraping can provide all kinds of data for your business. It can help save 100+ hours a month on jobs that would otherwise have required manual work. It saves money, since you don't need to hire someone to do menial manual work. All of this combined frees me up to do more strategic things that can help grow my websites. Companies looking for a cloud-based, self-serve web page scraping platform need look no further than Mozenda.
You may be surprised to learn that, with over 7 billion pages scraped, Mozenda has experience serving business customers from all around the world. Apify offers many modules, called actors, for data processing, turning web pages into APIs, data transformation, crawling websites, running headless Chrome, and so on.

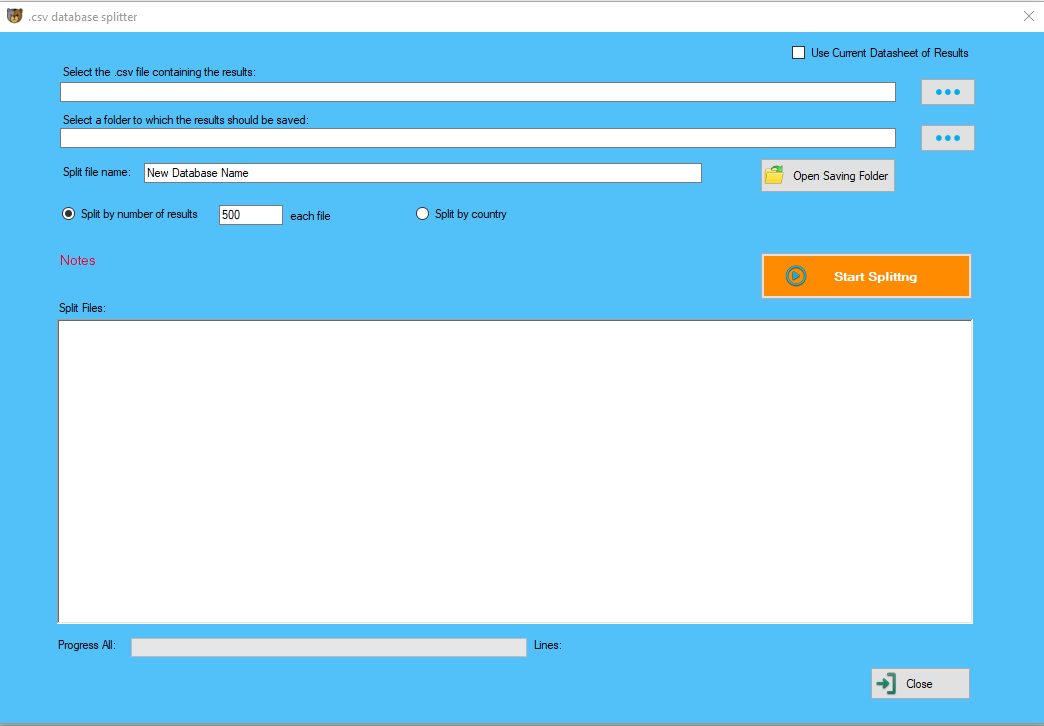

However, the tools available for building your own web scraper still require some advanced programming knowledge. The scope of this knowledge also increases with the number of features you'd like your scraper to have.

First, the web scraper is given one or more URLs to load before scraping. Then the scraper extracts either all the data on the page or the specific data selected by the user before the project is run. Most web scrapers output data to a CSV or Excel spreadsheet, while more advanced scrapers support other formats, such as JSON, which can be used for an API.

Web scraping is also called web data extraction, screen scraping or web harvesting. If you are going to collect personal data or other sensitive information, make sure your data collection and storage processes comply with the GDPR. Machines and AI can make such strategic tasks more efficient, helping you glean useful data from millions of web resources in a short time. You can use web crawlers and scrapers to access information about your target leads, such as age, job position, education, or geolocation.

Before proceeding to the concepts of NLTK, let us understand the relation between text analysis and web scraping. In the previous chapter, we saw how to deal with the videos and images we obtain as part of web scraping content. In this chapter, we are going to deal with text analysis using a Python library and learn about it in detail.
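
As a first step toward text analysis, here is the Bag of Words model mentioned earlier, written with the standard library instead of NLTK so that the mechanics are visible. The regex tokenizer is a deliberately simple stand-in for NLTK's tokenizers, and the function names are illustrative. Each document becomes a vector of word counts over a shared vocabulary, which is the numeric form ML algorithms need.

```python
import re
from collections import Counter
from typing import List, Tuple


def tokenize(text: str) -> List[str]:
    """Lowercase and keep runs of letters, digits and apostrophes (a simple NLTK stand-in)."""
    return re.findall(r"[a-z0-9']+", text.lower())


def bag_of_words(documents: List[str]) -> Tuple[List[str], List[List[int]]]:
    """Return (vocabulary, vectors): one count vector per document over a sorted vocabulary."""
    counts = [Counter(tokenize(doc)) for doc in documents]
    vocabulary = sorted(set().union(*counts))
    vectors = [[c[word] for word in vocabulary] for c in counts]
    return vocabulary, vectors
```

Word order is discarded, which is the defining simplification of BoW. In practice you would also remove stop words and possibly stem words before counting, which is where NLTK becomes useful.
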